Why 70% of Supply Chain AI Agent Pilots Underperform — And How to Fix Them in 2026

The supply chain industry is deep into its AI agent era — and the results are sobering. According to Gartner, organizations will abandon 60% of AI projects unsupported by AI-ready data through 2026. Meanwhile, only 23% of supply chain organizations even have a formal AI strategy. The gap between conference demos and operational reality has never been wider.

The Hype-Reality Gap

At industry events, AI agents are shown rebalancing inventory, re-planning production, and resolving exceptions autonomously. But as Supply Chain Management Review recently reported, private conversations with supply chain leaders reveal a starkly different picture: pilots that generated recommendations but failed to change outcomes. When demand spikes hit, suppliers missed dates, or schedules slipped, planners reverted to spreadsheets and judgment calls. The agent was visible but no longer decisive.

This isn't an isolated anecdote. Gartner's 2025 survey found that while 72% of supply chain organizations have deployed generative AI, most report middling results for productivity and ROI. The problem isn't the technology — it's how organizations deploy it.

The Five Failure Patterns

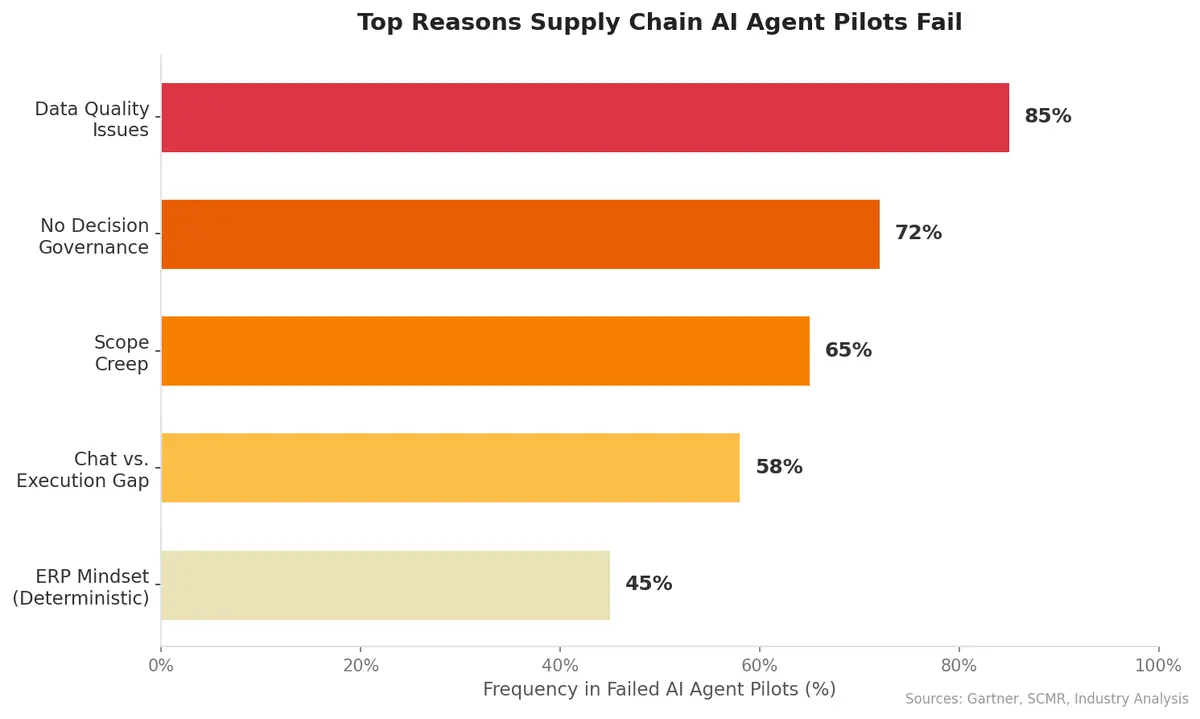

An analysis of industry reports and deployment case studies reveals a clear taxonomy of AI agent pilot failures:

1. The Autonomy Illusion

Many pilots launch with the assumption that full end-to-end autonomy is the natural destination. In practice, supply chain decisions involve tradeoffs across cost, service level, and risk that shift daily. Pilots that showed promise focused on narrower decision points — prioritizing which purchase orders to expedite when capacity tightened, or flagging inventory imbalances early enough for human intervention.

2. The ERP Mindset

Organizations treat AI agents like ERP transactions: deterministic, reproducible, predictable. But agents are probabilistic systems. The same request can produce different outputs depending on what is in the context window, which model version is running, and how fresh the underlying data is. Teams that expect ERP-like consistency set themselves up for disillusionment.

3. Data Quality Denial

An often-cited figure attributed to Gartner holds that 85% of AI projects fail due to poor data quality. A pilot runs beautifully on a clean, curated dataset, but production means incomplete supplier records, lagging inventory counts, and conflicting demand signals from multiple channels. Organizations that don't invest in data readiness before agent deployment are building on sand.

4. Missing Decision Governance

Which decisions does the agent own? Under what conditions? With what tolerance for uncertainty? Most failed pilots launched without answering these questions. When an agent recommends reallocating $2 million in inventory and no one knows whether it has authority to execute, the recommendation dies in an email thread.

5. Chat vs. Execution Confusion

Perhaps the most fundamental error is confusing a recommendation engine with an execution agent. As Supply Chain Management Review notes, the distinction between "chat" (generating suggestions) and "execution" (taking action within defined parameters) is where most organizations stall. An agent that tells a planner what to do is a chatbot with a supply chain skin. An agent that executes within governed boundaries is an operational asset.

What Success Actually Looks Like

Not every deployment fails. Hershey's recent partnership with Aera Technology demonstrates what right-sized AI agent deployment looks like. Rather than pursuing blanket end-to-end autonomy, Hershey deployed autonomous agent teams that self-assemble for specific decisions, with dedicated learning and governance agents to optimize policies and enforce compliance. The key differentiator is multidimensional simulation integrated with agentic AI, which allows the system to model consequences before acting.

McKinsey data reinforces this pattern. Among companies successfully implementing AI in supply chain operations, 41% report 10–19% cost reductions — but these gains come from focused deployments with clear decision boundaries, not ambitious end-to-end automation attempts.

A Framework for Right-Sizing AI Agent Scope

Organizations ready to move beyond pilot purgatory should apply a four-step framework:

Define the decision boundary. Identify specific, repeatable decisions the agent will own. "Optimize our supply chain" is not a decision boundary. "Recommend expedite actions for purchase orders when supplier lead time exceeds committed date by more than 3 days" is.
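A decision boundary is easiest to enforce when it is written down as an explicit, testable rule. The sketch below is one possible way to encode the expedite example; the `PurchaseOrder` fields, the sample orders, and the reading of "lead time exceeds committed date" as "projected delivery date is later than the committed date" are illustrative assumptions, not part of any specific system.

```python
from dataclasses import dataclass
from datetime import date

# Threshold from the example rule: more than 3 days late.
MAX_SLIP_DAYS = 3


@dataclass(frozen=True)
class PurchaseOrder:
    """Hypothetical record; field names are assumptions for illustration."""
    po_number: str
    committed_date: date   # delivery date the supplier committed to
    projected_date: date   # current projected delivery date


def in_decision_boundary(po: PurchaseOrder, max_slip_days: int = MAX_SLIP_DAYS) -> bool:
    """True only when projected delivery slips past the commitment by more than max_slip_days."""
    slip_days = (po.projected_date - po.committed_date).days
    return slip_days > max_slip_days


# Made-up sample data.
orders = [
    PurchaseOrder("PO-1001", date(2026, 3, 2), date(2026, 3, 4)),  # 2 days late
    PurchaseOrder("PO-1002", date(2026, 3, 2), date(2026, 3, 9)),  # 7 days late
]

expedite_candidates = [po.po_number for po in orders if in_decision_boundary(po)]
print(expedite_candidates)
```

Anything that falls outside the predicate is simply not the agent's decision, which keeps its scope auditable.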

Establish governance thresholds. Set clear financial and operational limits. For example: below $50K impact, the agent executes autonomously; between $50K and $500K, it recommends with human approval; above $500K, it escalates with full scenario analysis.
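Thresholds like these can live in code or configuration rather than in a slide deck. The following is a minimal sketch of such a routing rule, assuming a single estimated dollar impact per decision; the `Route` names and the treatment of the exact $50K and $500K values as requiring human approval are assumptions made for illustration.

```python
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "execute autonomously"
    HUMAN_APPROVAL = "recommend, pending human approval"
    ESCALATE = "escalate with full scenario analysis"


# Example limits from the text; real limits would be set per organization.
AUTO_LIMIT_USD = 50_000
APPROVAL_LIMIT_USD = 500_000


def route_decision(impact_usd: float) -> Route:
    """Map the estimated financial impact of a decision to a governance route."""
    if impact_usd < 0:
        raise ValueError("impact_usd must be non-negative")
    if impact_usd < AUTO_LIMIT_USD:
        return Route.AUTO_EXECUTE
    if impact_usd <= APPROVAL_LIMIT_USD:
        # Assumption: the boundary values themselves go to a human.
        return Route.HUMAN_APPROVAL
    return Route.ESCALATE


for impact in (12_000, 180_000, 2_000_000):
    print(f"${impact:,}: {route_decision(impact).value}")
```

Under this rule, a $2 million inventory reallocation never stalls in an email thread: it is escalated by design.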

Invest in data readiness first. Audit the data feeds the agent will consume. If supplier on-time delivery data is updated weekly instead of daily, the agent's recommendations will be stale before they're acted upon. Fix the data pipeline before deploying the agent.
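A data audit can start with something as simple as a freshness check on each feed. The sketch below assumes each feed exposes a last-updated timestamp; the feed names, the maximum ages, and the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta

# Hypothetical feeds and the maximum age the agent can tolerate for each.
MAX_AGE = {
    "supplier_otd": timedelta(days=1),
    "inventory_counts": timedelta(hours=4),
}


def stale_feeds(last_updated: dict[str, datetime], now: datetime) -> list[str]:
    """Return the feeds that are missing or older than their allowed age."""
    stale = []
    for feed, max_age in MAX_AGE.items():
        updated = last_updated.get(feed)
        if updated is None or now - updated > max_age:
            stale.append(feed)
    return stale


# Made-up timestamps: the on-time delivery feed is refreshed weekly.
now = datetime(2026, 1, 15, 12, 0)
last_updated = {
    "supplier_otd": datetime(2026, 1, 9, 6, 0),
    "inventory_counts": datetime(2026, 1, 15, 10, 0),
}

print(stale_feeds(last_updated, now))
```

A check like this could gate the agent: if any required feed is stale, it downgrades from executing to recommending, or declines to act at all.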

Measure outcomes, not activity. The metric isn't "number of recommendations generated." It's "percentage of recommendations that changed an operational outcome." If planners override the agent 90% of the time, the agent isn't working — regardless of how sophisticated its model is.
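Both numbers can be computed from a plain log of recommendations. This is a rough sketch, assuming each logged recommendation records whether a planner overrode it and whether it changed an operational outcome; the `Recommendation` fields and the sample log are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    """Hypothetical log entry; field names are assumptions."""
    rec_id: str
    overridden: bool        # a planner replaced the agent's recommendation
    changed_outcome: bool   # the recommendation altered an operational result


def outcome_metrics(recs: list[Recommendation]) -> dict[str, float]:
    """Compute override rate and outcome-change rate over a recommendation log."""
    total = len(recs)
    if total == 0:
        return {"override_rate": 0.0, "outcome_change_rate": 0.0}
    overrides = sum(r.overridden for r in recs)
    changed = sum(r.changed_outcome for r in recs)
    return {
        "override_rate": overrides / total,
        "outcome_change_rate": changed / total,
    }


# Made-up log of four recommendations.
log = [
    Recommendation("R1", overridden=True, changed_outcome=False),
    Recommendation("R2", overridden=True, changed_outcome=False),
    Recommendation("R3", overridden=False, changed_outcome=True),
    Recommendation("R4", overridden=True, changed_outcome=False),
]

metrics = outcome_metrics(log)
print(metrics)
```

Tracking the two rates side by side makes it harder to mistake a high volume of recommendations for operational impact.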

Human-in-the-Loop Is Not a Weakness

The industry narrative positions human oversight as a temporary crutch on the path to full autonomy. That framing is counterproductive. The most effective supply chain AI deployments treat human-in-the-loop as a permanent architectural feature, not a transitional phase.

This doesn't mean humans review every decision. It means the system is designed with escalation paths, exception handling, and feedback loops that improve agent performance over time. The agent learns from human overrides rather than treating them as failures.

Moving Forward

The 60% abandonment rate Gartner predicts isn't inevitable. Organizations that define narrow decision boundaries, invest in data quality, establish clear governance, and measure operational outcomes rather than agent activity will be the ones that break through pilot purgatory.

The question isn't whether AI agents belong in supply chain operations. They do. The question is whether your organization has the discipline to deploy them as operational tools rather than technology demonstrations.

CXTMS embeds AI agents with human-in-the-loop guardrails, governed decision boundaries, and real-time data integration — turning pilot projects into production systems. Contact us for a demo.